Quality Gates And Their Importance

Introduction: What Are Quality Gates?

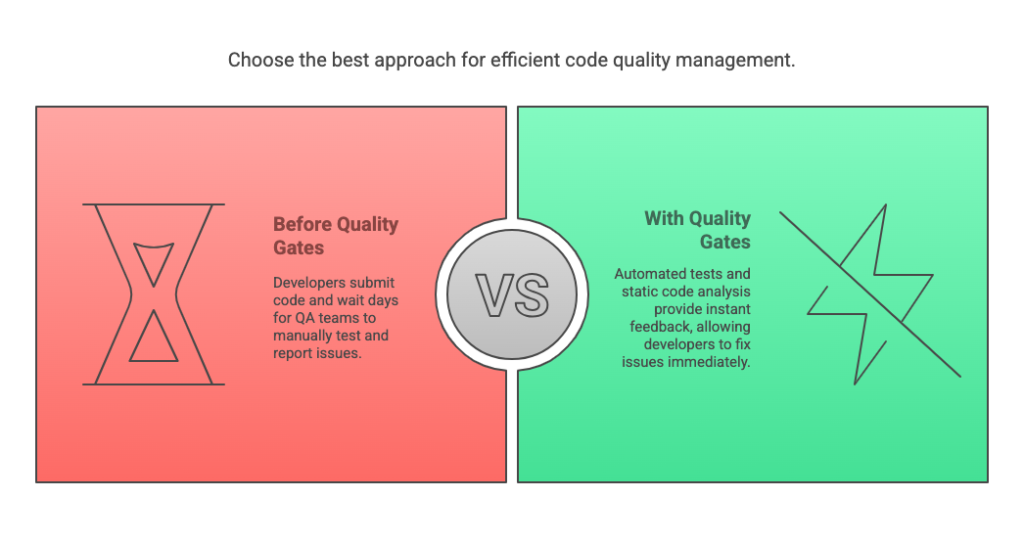

We live in a time when everything is about speed. We even expect groceries delivered to our doorstep in 10 minutes. The same goes for software development, which moves at lightning speed today. Agile, DevOps, and CI/CD pipelines have made software releases faster than ever. But with speed comes risk, and with risk comes a question: how do we ensure quality without slowing down delivery? Let’s dive into quality gates and their importance in this article.

The first answer that comes to mind is testing, right? But to keep testing from becoming a bottleneck, we need to spread it across the development process, both left (earlier) and right (later). That’s where quality gates come into the picture.

A quality gate is a predefined checkpoint in the Software Development Life Cycle (SDLC) that ensures specific quality criteria are met before moving to the next phase. Think of it as a security check at an airport. If you don’t meet the requirements, you can’t proceed.

Seen from another perspective, quality gates also give faster feedback on the quality of the application at various points in the SDLC.

Quality gates help teams catch defects early, prevent costly fixes, and maintain a high standard of software quality. They act as automated or manual validation points that ensure only high-quality code moves forward.

Importance of Quality Gates

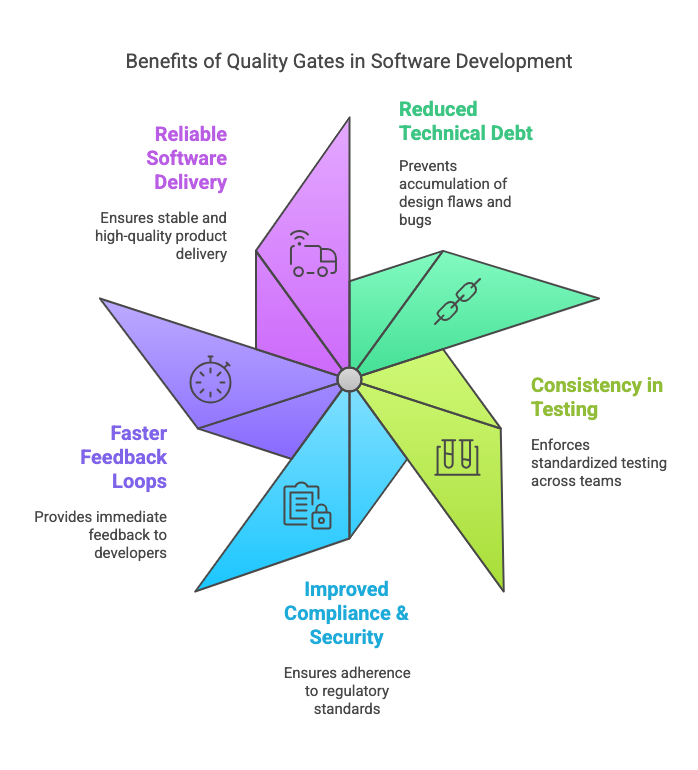

Why should we implement quality gates in our software development process? Here are a few of the many reasons:

1. Prevention Over Cure: Catching Defects Early Is Much Cheaper Than Fixing Them Post-Release

Fixing a bug early in the development cycle is exponentially cheaper than addressing it after release. According to studies like those by the IBM System Science Institute, the cost of fixing a defect increases significantly as it progresses through the SDLC.

Suppose it costs $1 to find a gap in the requirements phase. The same change might cost $5 in the development phase and $10 once it reaches QA, because time is spent raising the bug and getting it fixed. If it reaches production, the cost can be $100 or much more, depending on the impact of the issue.

This is because defects found later require more effort, debugging time, hotfixes, and potential patches, often leading to downtime, customer dissatisfaction, and revenue loss.

Let’s understand with a more practical example:

Imagine a banking app that miscalculates interest due to a small rounding error in the code. If caught during unit testing, fixing it might take minutes. However, if the issue reaches production, incorrect balances may affect thousands of users, requiring complex rollbacks, compensations, and reputational damage control.
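
As a minimal sketch of how a unit test could catch this kind of rounding error, assume a hypothetical `monthly_interest` helper that rounds half-up to the cent. The function name and the rounding rule are assumptions for illustration; the real rule would come from the product requirements.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def monthly_interest(balance: Decimal, annual_rate: Decimal) -> Decimal:
    """Hypothetical helper: one month of simple interest, rounded half-up to cents."""
    raw = balance * annual_rate / Decimal(12)
    return raw.quantize(CENT, rounding=ROUND_HALF_UP)


def test_monthly_interest_rounds_half_up() -> None:
    # 1000.50 * 0.05 / 12 = 4.16875, which rounds to 4.17.
    assert monthly_interest(Decimal("1000.50"), Decimal("0.05")) == Decimal("4.17")
    # 30.00 * 0.05 / 12 = 0.125, an exact tie: half-up gives 0.13,
    # while half-even rounding would give 0.12.
    assert monthly_interest(Decimal("30.00"), Decimal("0.05")) == Decimal("0.13")


test_monthly_interest_rounds_half_up()
```

A test like this runs in milliseconds as part of a unit test gate, so a change to the rounding mode would be flagged long before it reaches customer balances.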

2. Reduced Technical Debt: Preventing the Accumulation of Poor Design, Bugs, and Performance Bottlenecks

Teams often need to prioritise speed over quality to meet promised deadlines and deliveries. But this can compromise the quality of the application at many levels, as poorly structured code, inefficient logic, and missing documentation accumulate. Over time, this debt slows down development, increases maintenance costs, and makes new feature development riskier.

Quality gates act as checkpoints to ensure that:

- Code follows best practices.

- Performance and scalability considerations are addressed early.

- Security vulnerabilities don’t accumulate.

For example, a startup may push quick-and-dirty code to meet deadlines. Without quality gates, unoptimized database queries, duplicated code, and a lack of modularity may become a major roadblock six months down the line, requiring significant rework before scaling.

3. Consistency in Testing: Standardized Practices Across Teams

When different teams follow different testing approaches, it leads to:

- Inconsistent test coverage (some features tested well, others barely tested).

- Missed critical defects due to gaps in test planning.

- Unreliable test results, making defect tracking difficult.

Quality gates enforce standardized testing by ensuring that:

- All code undergoes unit and functional testing before merging.

- Regression suites are executed on every release.

- Non-functional aspects like security, performance, and accessibility are validated.

For example, in large organizations, multiple development teams work on different microservices. Without common quality gates, one team might have strict automated tests, while another relies on manual verification, leading to uneven quality across the system.

4. Improved Compliance & Security: Ensuring Adherence to Standards and Regulations

Many industries, such as finance, healthcare, and government sectors, must comply with strict security and regulatory standards like:

- GDPR (General Data Protection Regulation)

- HIPAA (Health Insurance Portability and Accountability Act)

- ISO 27001 (Information Security Management)

- SOX (Sarbanes-Oxley Act for financial reporting)

Quality gates enforce compliance by ensuring:

- Code is reviewed for security vulnerabilities.

- Data handling follows legal requirements.

- Encryption, access controls, and audit logs are in place.

For example, a healthcare software company must ensure patient data is encrypted. A quality gate that automatically scans code for unencrypted sensitive information can prevent compliance violations and costly legal penalties.
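
To make the idea of an automated scan concrete, here is a deliberately small sketch. The two patterns and the sample text are hypothetical, and a real gate would rely on a maintained scanner and rule set rather than a pair of regular expressions.

```python
import re

# Hypothetical rules for illustration only.
PATTERNS = {
    "ssn_like": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "hardcoded_password": re.compile(
        r"password\s*=\s*['\"][^'\"]+['\"]", re.IGNORECASE
    ),
}


def scan_source(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


sample = 'patient_ssn = "123-45-6789"\npassword = "hunter2"\nname = load_name()\n'
findings = scan_source(sample)
gate_passed = not findings  # the gate fails as soon as anything is found
print(findings, gate_passed)
```

The gate passes only when the list of findings is empty, which keeps the criterion simple to enforce in a pipeline.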

5. Faster Feedback Loops: Immediate Feedback to Developers

Quality gates, especially automated ones like linting, static code analysis, query performance checks, unit tests, and UI sanity tests, give developers quick insight into issues, reducing delays in fixing defects.

For example, a developer commits code with an unoptimized database query. A quality gate in CI/CD immediately flags it due to high execution time. The developer fixes it before merging, preventing performance issues in production.
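
One way such a check might look is sketched below, using an in-memory SQLite database so it runs anywhere. The table, the query, and the 500 ms budget are all assumptions for illustration; a real gate would measure against a representative dataset and a budget agreed by the team.

```python
import sqlite3
import time


def timed_query(conn: sqlite3.Connection, sql: str, params: tuple = ()):
    """Run a query and return its rows together with the elapsed time in ms."""
    start = time.perf_counter()
    rows = conn.execute(sql, params).fetchall()
    elapsed_ms = (time.perf_counter() - start) * 1000
    return rows, elapsed_ms


def query_gate(elapsed_ms: float, budget_ms: float) -> bool:
    """The gate passes only when the query stays within its time budget."""
    return elapsed_ms <= budget_ms


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER)")
conn.executemany(
    "INSERT INTO orders (customer_id) VALUES (?)",
    [(i % 100,) for i in range(1000)],
)

rows, elapsed_ms = timed_query(
    conn, "SELECT id FROM orders WHERE customer_id = ?", (7,)
)
passed = query_gate(elapsed_ms, budget_ms=500.0)  # hypothetical budget
print(f"{len(rows)} rows in {elapsed_ms:.2f} ms, gate passed: {passed}")
```

Wall-clock timings vary between machines, so budgets for a check like this are usually set generously or compared against a recorded baseline.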

6. Reliable Software Delivery: Ensuring a Stable, High-Quality Product

Ultimately, all these benefits lead to one goal: delivering software that is stable, bug-free, and performs well for users.

- Customers trust the product more when it consistently works as expected.

- Fewer production defects mean less firefighting for engineering teams.

- Businesses avoid costly service outages and maintain their reputation.

For example, a SaaS product used by thousands of businesses implements strong quality gates at every stage (code review, automated testing, performance checks, release validation). As a result, it rarely experiences downtime or security breaches, maintaining high customer satisfaction and retention rates.

Different Quality Gates and Their Roles in SDLC

Quality gates can exist at various stages of the SDLC. Ideally, a quality gate can be added at any stage where some form of quality control is possible. It can be as small as a team discussion to find gaps in requirements and design, or as large as a full-fledged API or UI regression of the application.

Each gate has a specific focus and validation criteria. Let’s explore some widely used quality gates at the major checkpoints of the SDLC and their importance:

1. Requirement Discussion Quality Gate

🔹 Purpose:

This quality gate ensures that requirements are clear, testable, and aligned with business needs. It usually takes the form of a collaborative discussion between all teams, where requirements are discussed and explained, questions are asked, and requirements are refined if needed.

🔹 Key Checks:

- Are requirements well-documented and unambiguous?

- Are acceptance criteria defined?

- Are testability and edge cases discussed?

🔹 How to Implement

- ✅ Define Acceptance Criteria – Ensure requirements are well-documented with clear, testable acceptance criteria.

- ✅ Review & Validate Requirements – Conduct requirement reviews with developers, testers, and business teams. The dev team helps find technical limitations, and QA helps point out edge cases and requirement gaps.

- ✅ Create Testable User Stories – Convert requirements into Gherkin syntax (Given-When-Then) for clarity (if applicable).

- ✅ Automate Requirement Traceability – Use JIRA, Azure DevOps, or TestRail to map requirements to test cases. This helps all stakeholders, including the dev team, find the tests for each requirement faster.
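
The traceability step above can be sketched as a small check, assuming the requirement IDs and the test-to-requirement links have already been exported from the tracking tool. The IDs and test names here are hypothetical.

```python
def untested_requirements(
    requirements: set[str], test_links: dict[str, list[str]]
) -> list[str]:
    """Return requirement IDs that no test case is linked to."""
    covered = {req for linked in test_links.values() for req in linked}
    return sorted(requirements - covered)


# Hypothetical export: test case name -> requirement IDs it verifies.
requirements = {"REQ-1", "REQ-2", "REQ-3"}
test_links = {
    "test_login_success": ["REQ-1"],
    "test_login_locked_account": ["REQ-1", "REQ-2"],
}

missing = untested_requirements(requirements, test_links)
print(missing)  # requirements that still need at least one test
```

A gate built on this would block a story from moving forward while the returned list is non-empty.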

2. Development / Design Quality Gate

🔹 Purpose:

Two types of quality gate can be configured around development. The first applies when the team follows a TDD approach, where tests (scenarios, use cases, or charters) curated by the QA team are used by the dev team to develop the functionality. The second is dev testing.

🔹 Key Checks:

a. Pre-commit or PR checks:

These can be set up either as pre-commit checks or as checks that run before a PR is merged. It depends on how the team collaborates and which framework it wants to follow.

- Unit Tests

- Component Tests

- API Contract Tests

- Static Code Analysis (Checkstyle, SonarCloud, etc.)

🔹 How to Implement:

- ✅ Static Code Analysis – Enforce coding standards using automated tools.

- ✅ Peer Code Reviews – Implement mandatory pull request (PR) approvals in Git repositories.

- ✅ Unit Testing Automation – Require unit tests before merging code.

- ✅ Version Control Best Practices – Use Git Flow, feature branching, and commit message conventions.
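
A pre-merge gate of this kind often boils down to running a list of commands and blocking the merge if any of them fails. The sketch below assumes nothing about the team's tooling: the two commands are placeholders that simply succeed or fail, standing in for a real linter and unit test runner.

```python
import subprocess
import sys


def run_checks(checks: list[tuple[str, list[str]]]) -> list[str]:
    """Run each command in order and return the names of the checks that failed."""
    failed = []
    for name, command in checks:
        result = subprocess.run(command, capture_output=True, text=True)
        if result.returncode != 0:
            failed.append(name)
    return failed


# Placeholder commands so the sketch runs anywhere. A real setup would call
# the team's own linter, unit tests, and contract tests here.
checks = [
    ("lint", [sys.executable, "-c", "print('lint ok')"]),
    ("unit-tests", [sys.executable, "-c", "raise SystemExit(1)"]),
]

failed = run_checks(checks)
print("merge blocked by:" if failed else "all checks passed", failed)
```

The same function can back a pre-commit hook or a PR pipeline step; only the list of commands changes.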

b. Dev Testing:

This is effective when the dev team maintains a separate environment to make sure things work before moving them to the QA environment. The dev team can use high-priority or critical tests prepared by the QA team to make sure the feature is at least in working condition.

A dedicated dev environment might not be necessary, though. In today’s agile world, many teams follow feature-branch-based deployment, where a team working on a feature branch deploys it to a temporary environment and the QA team tests it there. Dev testing can also be done on such an environment. This saves much of the time otherwise spent when the QA team raises bugs and follows up on them.

3. Sanity / Smoke / Build Quality Gate

On the surface, all three seem to serve the same purpose: checking whether the new build pushed to QA is ready for functional testing. Why is this necessary? Think of it as a check that saves dev and QA a lot of time that would otherwise be wasted on unnecessary bugs.

But these three actually have different purposes as quality gates:

a. Build Integrity Testing:

🔹 Purpose:

This is the very first of the three and is executed immediately after a new build is generated and deployed to the test environment. It ensures the build itself is properly compiled, deployed, and ready for testing.

🔹 Key Checks:

- The build installs/deploys successfully on test environments.

- No missing files, dependencies, or incorrect configurations.

- Backend services, APIs, and databases are accessible.

- Versioning is correct (build number matches expected release).

- Logs show no major errors on startup.

🔹 How to Implement:

- ✅ Verify Build Deployment – Ensure the build installs or deploys correctly on the test environment.

- ✅ Check Configurations & Dependencies – Validate database connections, API integrations, and system settings.

- ✅ Log & Debug Issues – Capture and analyze build logs for errors.
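
The key checks above can be sketched as a function over a status payload. The payload shape (`version`, `dependencies`, `startup_errors`) is an assumption for illustration, as if it came from a hypothetical health endpoint of the deployed build.

```python
def build_integrity_problems(status: dict, expected_version: str) -> list[str]:
    """Return a list of human-readable problems; an empty list means the gate passes."""
    problems = []
    actual = status.get("version")
    if actual != expected_version:
        problems.append(f"version mismatch: expected {expected_version}, got {actual}")
    for name, healthy in status.get("dependencies", {}).items():
        if not healthy:
            problems.append(f"dependency not reachable: {name}")
    errors = status.get("startup_errors", 0)
    if errors > 0:
        problems.append(f"{errors} error(s) logged on startup")
    return problems


# Hypothetical payload reported by a freshly deployed build.
status = {
    "version": "2.4.0",
    "dependencies": {"database": True, "payments-api": False},
    "startup_errors": 0,
}

problems = build_integrity_problems(status, expected_version="2.4.1")
for problem in problems:
    print(problem)
```

Returning every problem at once, rather than stopping at the first, gives the dev team a complete picture of what is wrong with the build.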

b. Smoke Testing Gate

🔹 Purpose:

After the build integrity test has passed, we need to ensure the build is stable enough for further testing. For that, we execute smoke tests. If the smoke tests fail, the build is rejected immediately.

🔹 Key Checks:

- Application launches successfully without crashes.

- Critical workflows (login, navigation, API responses) function as expected.

- No major UI or backend failures (e.g., broken pages, missing data).

- Database connections and API integrations work.

- No “showstopper” defects (crashes, 500 errors, missing dependencies).

🔹 How to Implement:

- ✅ Automate Smoke Tests – Run a minimal test suite covering critical workflows (e.g., login, navigation, API responses).

- ✅ Run in CI/CD Pipelines – Trigger smoke tests immediately after deployment.

- ✅ Fail Fast – If smoke tests fail, reject the build.
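
The fail-fast behaviour described above can be sketched in a few lines. The check names and the lambdas standing in for real checks are hypothetical; in practice each callable would drive the application through a critical workflow.

```python
from typing import Callable


def run_smoke_suite(
    checks: list[tuple[str, Callable[[], bool]]]
) -> tuple[bool, list[str]]:
    """Run checks in order and stop at the first failure (fail fast).

    Returns whether the build is accepted and the names of the checks that ran.
    """
    executed = []
    for name, check in checks:
        executed.append(name)
        try:
            ok = check()
        except Exception:
            ok = False  # a crashing check counts as a failed check
        if not ok:
            return False, executed
    return True, executed


# Hypothetical stand-ins for real smoke checks.
checks = [
    ("login", lambda: True),
    ("navigation", lambda: False),
    ("api-responses", lambda: True),
]

accepted, executed = run_smoke_suite(checks)
print(accepted, executed)
```

Here the build is rejected after the second check, and the third never runs, which is exactly what keeps feedback quick.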

c. Sanity Testing Gate

🔹 Purpose:

Sanity testing checks if new features or fixes in a software build work correctly and if the build is stable enough for further testing. It is a quick test to make sure recent changes haven’t caused major issues. It is executed after a new fix or small update is applied to an existing build.

🔹 Key Checks:

- The specific bug fix or feature update works as expected.

- No related functionalities are broken due to the change.

- Core application features are still intact.

- UI elements and workflows are functional (buttons, forms, etc.).

🔹 How to Implement:

- ✅ Verify Recent Fixes – Execute test cases covering bug fixes and small feature updates.

- ✅ Manually Test Edge Cases – Perform quick manual verification if needed.

- ✅ Automate High-Impact Sanity Checks – Automate key scenarios for repeated sanity testing.

3. Functional Testing Gate

🔹 Purpose:

The Functional Testing Gate ensures that new features and changes behave as expected according to the business requirements. This is one of the most critical and widely used quality gates, as it validates the core functionality of an application before proceeding to further testing phases.

Unlike smoke or sanity testing (which provide a quick check for stability), functional testing is deeper and more detailed. It usually involves multiple types of testing.

🔹 Key Checks:

- Are all functional test cases executed?

- Are all core functionalities working as expected?

- Are edge cases and boundary conditions tested?

- Are all integrations between modules working?

- Are all reported defects triaged and resolved?

- Are negative and error scenarios tested?

🔹 How to Implement:

- ✅ Automate Functional Tests – Execute UI/API automated tests in CI/CD.

- ✅ Manual Exploratory Testing – Test edge cases beyond automation.

- ✅ Triage & Fix Defects – Ensure major issues are resolved before proceeding.

4. Regression Testing Gate

🔹 Purpose:

Regression testing should be done after all functional tests are done. The Regression Testing Gate ensures that new changes haven’t broken existing functionality. It acts as a safety net after functional testing, catching defects introduced due to recent modifications before the build moves forward.

🔹 Key Checks:

- Has the full regression test suite been executed and passed?

- Were any new high-priority defects introduced?

- Has an impact analysis been completed?

- Are test cases updated to cover new scenarios?

- Has backward compatibility been verified (if applicable)?

- Have integration points been tested?

- Have database migrations and schema changes been validated?

🔹 How to Implement:

- ✅ Execute Automated Regression Tests – Run comprehensive automated regression test suites.

- ✅ Impact Analysis – Identify affected areas before regression testing.

- ✅ Fix & Revalidate – Ensure all new defects are fixed before progressing.

5. Non-Functional Testing Gate

🔹 Purpose:

As the name suggests, this gate tests everything beyond functionality. The Non-Functional Testing Gate ensures that the software is not only functional but also scalable, secure, and user-friendly. It validates aspects like performance, security, usability, and compliance, ensuring the system meets business and technical requirements beyond just functionality.

🔹 Key Checks:

- Is the software able to handle the expected user traffic?

- Can the application’s infrastructure scale with increasing load?

- Does the software ensure that users’ data is secure and encrypted?

- Does the infrastructure ensure authentication and vulnerability mitigation?

- Does the application adhere to GDPR, HIPAA, ISO 27001, or PCI-DSS, based on industry requirements?

- Has the application’s UI/UX been tested for ease of use and responsiveness?

- Is it compliant with WCAG accessibility guidelines (if applicable)?

- Are reliability and disaster recovery verified?

🔹 How to Implement:

- ✅ Automate Performance & Load Testing – Run load, stress, and endurance tests.

- ✅ Perform Security Scans – Identify vulnerabilities before release.

- ✅ Check Compliance & Accessibility – Validate GDPR, HIPAA, PCI-DSS standards.
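
For the performance part of this gate, a common approach is to compare a latency percentile from a load test against a budget. The sketch below uses the nearest-rank method; the sample latencies and the 300 ms budget are made-up values for illustration.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def latency_gate(samples_ms: list[float], p95_budget_ms: float) -> bool:
    """The gate passes only when the 95th percentile stays within budget."""
    return percentile(samples_ms, 95) <= p95_budget_ms


# Made-up response times in milliseconds, with one slow outlier.
latencies_ms = [120, 130, 125, 140, 135, 128, 132, 138, 900, 127]

p95 = percentile(latencies_ms, 95)
passed = latency_gate(latencies_ms, p95_budget_ms=300)
print(p95, passed)
```

Gating on a high percentile rather than the average means a few very slow requests are enough to fail the build, which matches how users experience performance problems.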

6. UAT / Pre-Production Testing Gate

🔹 Purpose:

The User Acceptance Testing (UAT) / Pre-Production Testing Gate ensures that the software meets business requirements and end-user expectations before going live. It serves as the final validation from stakeholders, business users, or customers to confirm that the application is ready for production.

Unlike functional and regression testing, UAT focuses on real-world use cases, workflows, and user experience, ensuring that the system works as intended in an environment similar to production.

🔹 Key Checks:

- All UAT scenarios and real-world workflows have been executed, and business users, product owners, or end-users have tested the system.

- No blocker or critical business issues remain open and all UI/UX feedback from UAT is addressed.

- The application runs smoothly in an environment mirroring production.

- Are release notes, documentation, and user training in place?

- Is sign-off from business users and stakeholders received?

🔹 How to Implement:

- ✅ Involve Business Users – Conduct real-world scenario testing with stakeholders.

- ✅ Test in a Pre-Prod Environment – Use a production-like environment.

- ✅ Get UAT Sign-Off – Require business approval before going live.

7. Maintenance Gate

🔹 Purpose:

Shift-left testing is trending, but testers need to shift right as well. This gate focuses on the period after software release to actively monitor how it’s performing in the real world and to address any bugs or issues that arise. It aims to ensure the software remains stable, functional, and meets user needs over time.

🔹 Key Checks:

- Is production monitoring in place? Is there a system actively tracking the software’s health?

- Is there a process to gather feedback from users through various channels like reviews, support tickets, and surveys?

- Is there an ongoing process to track and respond to any security vulnerabilities that might be discovered after release?

- Are there regular reviews of the software’s performance to identify areas for optimization and improvements?

- Are the user documentation, help guides, and API documentation being kept up to date with any changes, bug fixes, or new features?

- Are the channels for user support, such as helpdesks, FAQs, and communities, operating effectively?

🔹 How to Implement:

- ✅ Monitor Post-Release Performance – Track system health metrics with tools like New Relic, Datadog, Splunk.

- ✅ Optimize Database & Server Configurations – Tune system settings if required.

- ✅ Test Software on Updated OS, Browsers, or Servers – Ensure compatibility with new environments.

- ✅ Validate Database Migrations – Ensure no data corruption occurs.

- ✅ Check Third-Party API & Service Integrations – Ensure external dependencies function as expected.

- ✅ Set Up Production Monitoring – Ensure the software is up and running 24/7 with regular health checks and monitoring.

Challenges in Implementing Quality Gates

While quality gates are essential for maintaining high software quality, implementing them effectively comes with several challenges. If not managed properly, they can slow down development, create bottlenecks, or lead to resistance from teams.

Below are the key challenges:

🚧 1. Resistance to Change

🔹 Challenge:

- Developers, testers, and stakeholders may see quality gates as an extra layer of bureaucracy that slows down progress.

- Teams that are used to fast releases may resist strict validation processes because they see them as a bottleneck.

🔹 How It Impacts Software Development:

- Quality gates may be skipped or bypassed under time pressure. But lack of adherence to them reduces the effectiveness of testing and leads to defects slipping into production.

🔹 Possible Solution:

✔️ Educate teams on the benefits of quality gates (fewer production failures, reduced hotfixes).

✔️ Automate as much as possible to avoid manual bottlenecks and get faster feedback.

✔️ Start small – introduce gates incrementally instead of all at once. For example, start with a quick smoke test integrated into the dev deployment pipeline.

🐌 2. Slower Development & Delivery Pipeline

🔹 Challenge:

- If not optimized, quality gates can introduce long wait times in CI/CD pipelines. For example, a 4-hour UI regression suite integrated as a fail-fast step in the deployment pipeline is never a good idea.

- Too many manual approval steps slow down software delivery.

🔹 How It Impacts Software Development:

- Delays in releases, leading to missed deadlines.

- Developers wait for test results or approvals, affecting productivity.

🔹 Possible Solution:

✔️ Prioritize automation – Automate as much as possible, be it unit tests, API tests, UI tests, or component tests. Identify automation opportunities and act on them.

✔️ Integrate with CI/CD – Use tools like Jenkins, GitHub Actions, or GitLab CI/CD for automated quality checks.

✔️ Parallelize testing – Run functional, performance, and security tests concurrently to reduce waiting times.

✔️ Define fast-track criteria – If small changes have minimal impact, allow quick approvals. Divide the test suite into smaller groups and run only those for the impacted areas.
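
Running only the tests for impacted areas could look something like the sketch below. The path patterns and test group names are hypothetical, and the fallback rule (run everything when a changed file is not mapped) is one possible design choice, not the only one.

```python
from fnmatch import fnmatch

# Hypothetical mapping from changed paths to the test groups they affect.
AREA_MAP = {
    "services/payments/*": {"payments-api", "checkout-ui"},
    "services/auth/*": {"auth-api", "login-ui"},
    "docs/*": set(),
}
ALWAYS_RUN = {"smoke"}


def select_test_groups(changed_files: list[str]) -> list[str]:
    """Pick the test groups to run for a change set."""
    selected = set(ALWAYS_RUN)
    for path in changed_files:
        matched = False
        for pattern, groups in AREA_MAP.items():
            if fnmatch(path, pattern):
                selected |= groups
                matched = True
        if not matched:
            # Unknown area: run everything rather than guess at the impact.
            return sorted(set().union(ALWAYS_RUN, *AREA_MAP.values()))
    return sorted(selected)


print(select_test_groups(["services/payments/refund.py", "docs/readme.md"]))
print(select_test_groups(["infra/deploy.yml"]))
```

Falling back to the full suite for unmapped files trades some speed for safety, so a gap in the mapping never silently skips tests.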

🤖 3. Balancing Automation & Manual Testing

🔹 Challenge:

- Not all quality gates can be fully automated. Some require manual intervention (e.g., UAT, exploratory testing).

- Over-reliance on automation can miss usability issues that only humans can catch.

🔹 How It Impacts Software Development:

- Over-automating may lead to flaky tests, false positives, or maintenance overhead.

- Under-automating slows down the process, increasing the risk of human error.

🔹 Possible Solution:

✔️ Automate repeatable tasks (unit tests, API testing, regression suites).

✔️ Keep manual validation for critical areas (UX, exploratory testing, new features, UAT).

✔️ Use AI-powered test automation tools to reduce false positives.

🔗 4. Integration Complexity Across Tools

🔹 Challenge:

- Quality gates require integration between code repositories, CI/CD pipelines, test automation frameworks, security scanners, and reporting dashboards.

- Lack of proper integration leads to data silos, making it hard to track quality.

🔹 How It Impacts Software Development:

- Test results may not be visible across teams, reducing collaboration.

- Different tools may generate inconsistent quality reports.

🔹 Possible Solution:

✔️ Use unified DevOps platforms (Azure DevOps, GitLab, Jenkins) to manage testing, security, and deployments in one place.

✔️ Implement dashboard reporting tools (Allure, Report Portal, TestRail, SonarQube) for centralized tracking.

📌 5. High Maintenance Effort for Automated Quality Gates

🔹 Challenge:

- Automated quality gates require continuous maintenance to keep tests, security policies, and performance baselines up to date.

- Test scripts may become outdated or flaky as the application evolves.

🔹 How It Impacts Software Development:

- Frequent test failures due to outdated scripts slow down releases.

- Increased maintenance effort reduces testing efficiency.

🔹 Possible Solution:

✔️ Implement self-healing test automation to reduce flaky tests (tools like Testim, Healenium or Mabl).

✔️ Review and update test suites regularly to align with new features.

✔️ Use AI-driven test analytics to detect test failures automatically.
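
Even without specialised tooling, a simple heuristic over recent results can surface flaky tests. The sketch below is not how the tools named above work internally; it only assumes a history of pass/fail results per test, collected from repeated runs against unchanged code.

```python
def find_flaky_tests(history: dict[str, list[bool]], min_runs: int = 5) -> list[str]:
    """Flag tests that both passed and failed across enough recent runs."""
    flaky = []
    for name, results in history.items():
        if len(results) >= min_runs and any(results) and not all(results):
            flaky.append(name)
    return sorted(flaky)


# Hypothetical results from the last five runs (True = passed).
history = {
    "test_checkout_total": [True, True, True, True, True],
    "test_search_autocomplete": [True, False, True, True, False],
    "test_export_report": [False, False, False, False, False],
    "test_new_banner": [True, False],
}

print(find_flaky_tests(history))
```

A test that fails every time is treated as a genuine failure rather than a flaky one, and tests with too few runs are left out until there is enough data.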

🛑 6. Lack of Ownership & Accountability

🔹 Challenge:

- No clear ownership of quality gates leads to inconsistencies in enforcement.

- Developers, testers, and DevOps teams may assume that someone else is responsible.

🔹 How It Impacts Software Development:

- Quality gates may be skipped or ignored if no one is accountable.

- Bugs may go undetected due to unclear responsibilities.

🔹 Possible Solution:

✔️ Assign clear ownership for each quality gate (e.g., developers handle unit test gates, testers handle functional gates, security teams handle security gates).

✔️ Use CI/CD policies to enforce mandatory quality checks before merging.

💲 7. Increased Cost of Implementation

🔹 Challenge:

- Setting up automated quality gates, integrating tools, and training teams requires time and investment.

- Small teams or startups may struggle with budget constraints.

🔹 How It Impacts Software Development:

- Some teams may skip quality gates to reduce costs.

- Lack of proper investment in testing tools increases defects in production, leading to higher costs later.

🔹 Possible Solution:

✔️ Start with open-source tools (e.g., SonarQube for code quality, OWASP ZAP for security).

✔️ Use cloud-based testing platforms instead of on-premise solutions.

✔️ Focus on high-impact quality gates first (e.g., Functional, Security, Regression).

📊 8. Measuring the Effectiveness of Quality Gates

🔹 Challenge:

- Organizations may implement quality gates but lack visibility into their effectiveness.

- Without proper tracking, it’s hard to tell if gates are improving software quality or just slowing down development.

🔹 How It Impacts Software Development:

- Quality gates may become “checkbox processes” without real impact.

- Teams may struggle to justify time and cost investments.

🔹 Possible Solution:

✔️ Define KPIs (Key Performance Indicators) such as:

- Time to fix defects – Are defects identified at quality gates being resolved faster?

- Defect detection rate – Are we catching issues earlier?

- Build failure rate – How often do quality gates fail?

✔️ Use test analytics dashboards to measure trends and improvements.
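
The KPIs listed above could be computed along these lines. The `Defect` record and the stage labels are assumptions for illustration; the actual data would come from the team's defect tracker and pipeline history.

```python
from dataclasses import dataclass


@dataclass
class Defect:
    found_in: str        # hypothetical stage label, e.g. "unit", "qa", "production"
    hours_to_fix: float


def defect_detection_rate(defects: list[Defect]) -> float:
    """Share of defects caught before production."""
    if not defects:
        return 0.0
    caught = sum(1 for d in defects if d.found_in != "production")
    return caught / len(defects)


def mean_time_to_fix(defects: list[Defect]) -> float:
    """Average hours spent fixing a defect."""
    if not defects:
        return 0.0
    return sum(d.hours_to_fix for d in defects) / len(defects)


def build_failure_rate(gate_results: list[bool]) -> float:
    """Share of pipeline runs in which a quality gate failed (False = failed)."""
    if not gate_results:
        return 0.0
    return gate_results.count(False) / len(gate_results)


defects = [
    Defect("unit", 2.0),
    Defect("qa", 4.0),
    Defect("qa", 6.0),
    Defect("production", 20.0),
]
print(defect_detection_rate(defects))                  # 0.75
print(mean_time_to_fix(defects))                       # 8.0
print(build_failure_rate([True, True, False, True]))   # 0.25
```

Tracking these numbers per release makes it easier to see whether the gates are improving quality or only adding waiting time.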

Best Practices for Implementing Quality Gates

✅ Define Clear Criteria: Ensure every quality gate has measurable and enforceable criteria.

✅ Automate Where Possible: Use CI/CD pipelines to integrate automated quality checks.

✅ Continuous Monitoring: Track quality gate failures and refine processes.

✅ Enforce Gate Compliance: Ensure that all required checks are mandatory before progressing.

✅ Balance Speed & Quality: Optimize gate execution times to prevent bottlenecks.

✅ Train Teams on Quality Standards: Educate teams about the importance of quality gates.
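
To show what "measurable and enforceable criteria" might mean in practice, here is a minimal sketch of a gate defined as a list of thresholds. The metric names and limits are hypothetical, and real tools offer far richer gate definitions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Criterion:
    metric: str
    minimum: Optional[float] = None
    maximum: Optional[float] = None


def evaluate_gate(criteria: list[Criterion], metrics: dict[str, float]) -> list[str]:
    """Return the violations; an empty list means the gate passes."""
    violations = []
    for c in criteria:
        value = metrics.get(c.metric)
        if value is None:
            # A missing measurement fails the gate instead of passing silently.
            violations.append(f"{c.metric}: no measurement")
        elif c.minimum is not None and value < c.minimum:
            violations.append(f"{c.metric}: {value} is below minimum {c.minimum}")
        elif c.maximum is not None and value > c.maximum:
            violations.append(f"{c.metric}: {value} is above maximum {c.maximum}")
    return violations


# Hypothetical gate definition and measured values.
criteria = [
    Criterion("line_coverage", minimum=80.0),
    Criterion("critical_vulnerabilities", maximum=0),
    Criterion("smoke_pass_rate", minimum=100.0),
]
metrics = {"line_coverage": 84.5, "critical_vulnerabilities": 1}

violations = evaluate_gate(criteria, metrics)
print(violations)
```

Writing the criteria down as data keeps them visible and reviewable, and the same definition can be enforced in every pipeline.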

Conclusion

Quality gates are the unsung heroes of software testing. They enforce discipline, maintain quality, and ensure that software meets high standards before moving forward. By implementing automated and manual quality gates at various SDLC stages, teams can reduce defects, improve reliability, and accelerate software delivery without compromising quality.

But quality gates come with their own challenges, and overdoing them can impact deliveries in terms of time and cost and turn them into a bottleneck. To avoid that, start small, define your quality gates, automate where possible, and continuously refine your process. Your future self (and your users) will thank you! 🚀
